Overview and History of NTFS

In the early 1990s, Microsoft set out to create a high-quality, high-performance, reliable and secure operating system. The goal of this operating system was to allow Microsoft to get a foothold in the lucrative business and corporate market. At the time, Microsoft’s operating systems were MS-DOS and Windows 3.x, neither of which had the power or features needed for Microsoft to take on UNIX or other “serious” operating systems. One of the biggest weaknesses of MS-DOS and Windows 3.x was that they relied on the FAT file system. FAT provided few of the features needed for data storage and management in a high-end, networked, corporate environment. To avoid crippling Windows NT, Microsoft had to create a new file system for it that was not based on FAT. The result was the New Technology File System or NTFS.

It is often said (and sometimes by me, I must admit) that NTFS was “built from the ground up”. That’s not strictly an accurate statement, however. NTFS is definitely “new” from the standpoint that it is not based on the old FAT file system. Microsoft designed it around an analysis of the needs of its new operating system, rather than around an older system that it had to remain compatible with. However, NTFS is not entirely new, because some of its concepts were based on another file system that Microsoft was involved with creating: HPFS.

Before there was Windows NT, there was OS/2. OS/2 was a joint project of Microsoft and IBM that began in the late 1980s; the two companies were trying to create the next big success in the world of graphical operating systems. They succeeded, to some degree, depending on how you are measuring success. :^) OS/2 had some significant technical accomplishments, but suffered from marketing and support issues. Eventually, Microsoft and IBM began to quarrel, and Microsoft broke from the project and started to work on Windows NT. When they did this, they borrowed many key concepts from OS/2’s native file system, HPFS, in creating NTFS.

NTFS was designed to meet a number of specific goals. In no particular order, the most important of these are:

- Reliability: One important characteristic of a “serious” file system is that it must be able to recover from problems without losing data. NTFS implements specific features to allow important transactions to be completed as an integral whole, to avoid data loss, and to improve fault tolerance.

- Security and Access Control: A major weakness of the FAT file system is that it includes no built-in facilities for controlling access to folders or files on a hard disk. Without this control, it is nearly impossible to implement applications and networks that require security and the ability to manage who can read or write various data.

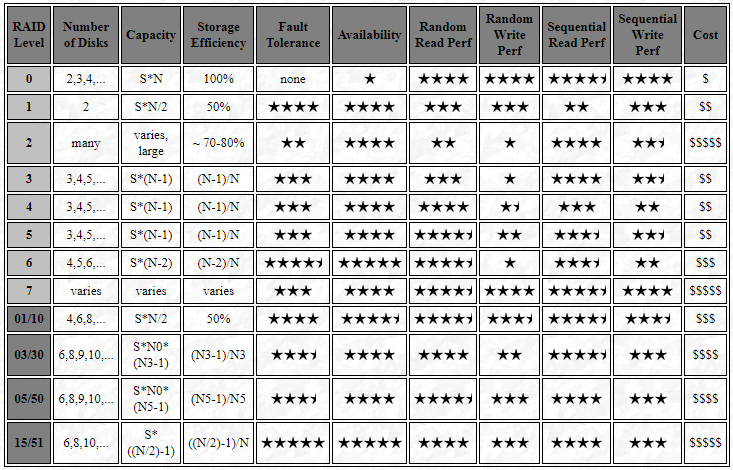

- Breaking Size Barriers: In the early 1990s, FAT was limited to the FAT16 version of the file system, which only allowed partitions up to 4 GiB in size (and only 2 GiB under MS-DOS, which capped clusters at 32 KiB). NTFS was designed to allow very large partition sizes, in anticipation of growing hard disk capacities, as well as the use of RAID arrays.

- Storage Efficiency: Again, at the time that NTFS was developed, most PCs used FAT16, which results in significant wasted disk space due to slack. NTFS avoids this problem by using a very different method of allocating space to files than FAT does.

- Long File Names: NTFS allows file names to be up to 255 characters, instead of the 8+3 character limitation of conventional FAT.

- Networking: While networking is commonplace today, it was still in its relatively early stages in the PC world when Windows NT was developed. At around that time, businesses were just beginning to understand the importance of networking, and Windows NT was given some facilities to enable networking on a larger scale. (Some of the NT features that allow networking are not strictly related to the file system, though some are.)
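To put rough numbers on the size and slack points in the list above, here is a minimal Python sketch. It assumes FAT16 can address about 2^16 clusters (ignoring the handful of reserved values) and that every file occupies a whole number of clusters. The function names are made up for illustration, and the 4 KiB cluster is only an assumed smaller cluster size for comparison, not a statement of how NTFS allocates space.

```python
KIB = 1024
GIB = 1024 ** 3

def fat16_max_partition_bytes(cluster_bytes):
    """Approximate FAT16 volume ceiling: 2**16 cluster addresses times the cluster size."""
    return (2 ** 16) * cluster_bytes

def slack_bytes(file_bytes, cluster_bytes):
    """Bytes allocated to a file but not used by its data (whole-cluster allocation)."""
    clusters = -(-file_bytes // cluster_bytes)  # integer ceiling division
    return clusters * cluster_bytes - file_bytes

if __name__ == "__main__":
    # Volume ceiling for the two largest FAT16 cluster sizes.
    for cluster in (32 * KIB, 64 * KIB):
        limit_gib = fat16_max_partition_bytes(cluster) // GIB
        print(f"{cluster // KIB} KiB clusters: about {limit_gib} GiB maximum")

    # Slack for a small 1,000-byte file at a large and a small cluster size.
    for cluster in (32 * KIB, 4 * KIB):
        wasted = slack_bytes(1000, cluster)
        print(f"{cluster // KIB} KiB clusters: {wasted} bytes of slack")
```

The same arithmetic shows why the two problems are linked on FAT16: reaching a bigger partition means using bigger clusters, and bigger clusters mean more wasted space per file.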

Of course, there are also other advantages associated with NTFS; these are just some of the main design goals of the file system. There are also some disadvantages associated with NTFS, compared to FAT and other file systems; life is full of tradeoffs. :^) In the other pages of this section we will fully explore the various attributes of the file system, to help you decide if NTFS is right for you.

For their part, Microsoft has not let NTFS lie stagnant. Over time, new features have been added to the file system. Most recently, NTFS 5.0 was introduced as part of Windows 2000. It is similar in most respects to the NTFS used in Windows NT, but adds several new features and capabilities. Microsoft has also corrected problems with NTFS over time, helping it to become more stable, and more respected as a “serious” file system. Today, NTFS is becoming the most popular file system for new high-end PC, workstation and server implementations. NTFS shares the stage with various UNIX file systems in the world of small to moderate-sized business systems, and is becoming more popular with individual “power” users as well.

The PC Guide

Site Version: 2.2.0 – Version Date: April 17, 2001

© Copyright 1997-2004 Charles M. Kozierok. All Rights Reserved.

This is an archive of Charles M. Kozierok’s PCGuide (pcguide.com) which disappeared from the internet in 2018. We wanted to preserve Charles M. Kozierok’s knowledge about computers and are permanently hosting a selection of important pages from PCGuide.